Let’s get one thing straight: if you haven’t been paying attention to multimodal generative AI, you’re already behind the curve. While everyone’s been obsessing over chatbots that can write your emails, the real revolution has been quietly brewing in the background: AI systems that don’t just master one party trick but can seamlessly juggle text, images, audio, and video like a tech-powered Renaissance person.

Think of it as the difference between a one-hit wonder and a multi-talented performer. Traditional AI might nail that chart-topping single, but multimodal AI is dropping entire albums across genres. And trust me, this versatility isn’t just impressive—it’s about to reshape everything from how doctors diagnose diseases to how you shop for those limited-edition sneakers you definitely don’t need (but absolutely deserve).

What’s the Big Deal About Multimodal AI?

Generative AI has already changed the game by creating new content rather than just analyzing existing data. But multimodal generative AI takes this to an entirely different level by integrating various data types or “modalities” like text, images, audio, and video to generate outputs that blend these different forms of information.

The significance of this tech is growing fast. By enabling AI to understand and generate content across different modalities, these systems can draw on more context than any single input type provides. That makes them more robust and versatile, and lets them deliver responses that are more contextually relevant and accurate than single-mode AI can typically manage.

This isn’t just incremental progress; it’s a paradigm shift that’s moving us toward more intuitive and natural interfaces between humans and machines. If you’re keeping tabs on the generative AI applications transforming industries this year, you’ll notice that multimodal AI is driving many of the most impressive breakthroughs.

Under the Hood: How Multimodal AI Actually Works

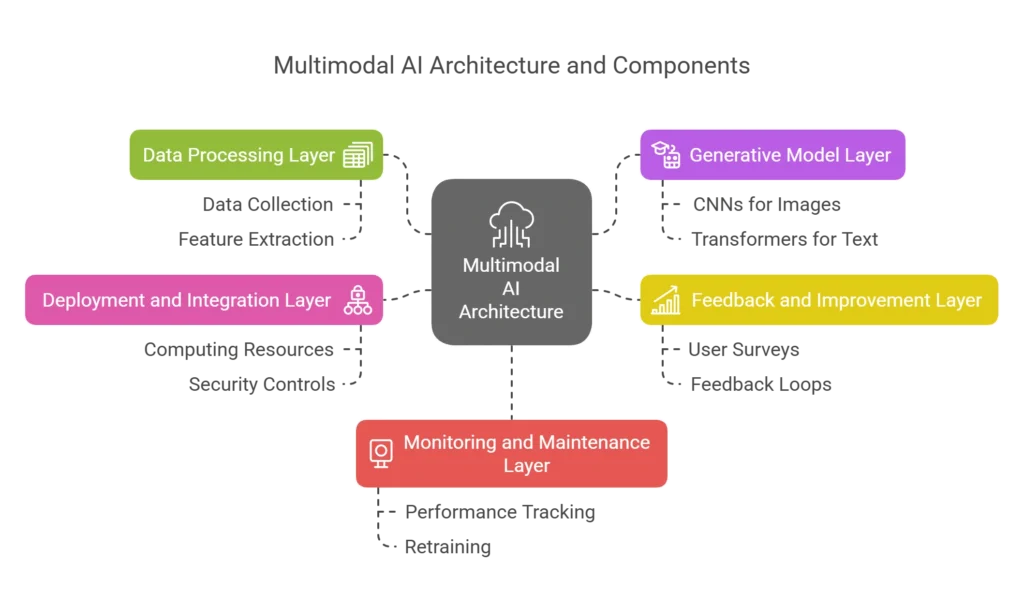

Before you get too starry-eyed about the possibilities, let’s break down how these complex systems actually function. A typical multimodal generative AI architecture consists of several essential layers, each playing a crucial role in creating those impressively cohesive outputs.

The data processing layer handles the dirty work: collecting, preparing, and processing diverse information. This involves gathering data from various sources, cleaning it up, and normalizing the aggregated data so that different modalities sit on comparable scales. Feature extraction then distills each modality into a compact representation, filtering out noise so the model can focus on the most pertinent elements.
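As a rough illustration of the cleaning and normalization steps, the sketch below drops any sample that has missing values in any modality (so rows stay aligned across modalities) and then z-scores each modality’s features. The modality names, feature sizes, and toy values are hypothetical, and real pipelines usually rely on learned encoders for feature extraction rather than this simple rescaling.

```python
import numpy as np


def clean_and_normalize(modalities: dict[str, np.ndarray]) -> dict[str, np.ndarray]:
    """Drop samples with missing values in ANY modality, then z-score each modality.

    Each array is (num_samples, num_features), and row i of every array describes
    the same sample, so rows are dropped jointly to keep the modalities aligned.
    """
    num_samples = len(next(iter(modalities.values())))
    keep = np.ones(num_samples, dtype=bool)
    for feats in modalities.values():
        keep &= ~np.isnan(feats).any(axis=1)

    normalized = {}
    for name, feats in modalities.items():
        clean = feats[keep]
        # Zero mean, unit variance per feature column; the epsilon avoids
        # division by zero for constant columns.
        normalized[name] = (clean - clean.mean(axis=0)) / (clean.std(axis=0) + 1e-8)
    return normalized


# Hypothetical toy data: 4 samples, with text and audio features on very different scales.
data = {
    "text": np.array([[0.1, 2.0], [0.3, 2.5], [np.nan, 1.0], [0.2, 3.0]]),
    "audio": np.array([[120.0, 0.5], [80.0, 0.7], [100.0, 0.6], [90.0, 0.4]]),
}
out = clean_and_normalize(data)
print({name: arr.shape for name, arr in out.items()})
```

The third sample is dropped from both modalities because its text features are incomplete, which keeps every remaining row paired with its counterpart.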

The generative model layer is where the AI gets its education. Various models are employed depending on the use case, with specialized approaches for different data types. Convolutional Neural Networks (CNNs) often handle image processing, while Transformer networks typically manage text-based data.

The feedback and improvement layer keeps the model in check, optimizing efficiency and accuracy. User surveys and interaction analysis help developers assess performance, while feedback loops identify output errors and provide corrective inputs back into the model.

When it’s time for deployment, the deployment and integration layer sets up the necessary infrastructure, including specialized computing resources and security controls. And post-launch, the monitoring and maintenance layer keeps everything running smoothly through performance tracking and occasional retraining.

Several fundamental models are commonly used in multimodal applications:

- Large Language Models (LLMs) process and generate textual information and are often extended to other modalities, as in vision-language models

- Variational Autoencoders (VAEs) leverage an encoder-decoder architecture to generate images and synthetic data

- Generative Adversarial Networks (GANs) excel at producing realistic images and video
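To make the VAE bullet more concrete, here is a minimal NumPy sketch of two pieces most VAEs share: the reparameterization trick, which samples a latent code from the encoder’s predicted mean and variance, and the KL-divergence term that keeps those latent codes close to a standard normal prior. The encoder and decoder networks are omitted; the `mu` and `log_var` values are hypothetical stand-ins for encoder outputs.

```python
import numpy as np

rng = np.random.default_rng(seed=0)


def reparameterize(mu: np.ndarray, log_var: np.ndarray) -> np.ndarray:
    """Sample z ~ N(mu, sigma^2) as mu + sigma * eps.

    Writing the sample this way keeps the randomness in eps, so in an autodiff
    framework gradients could flow back through mu and log_var.
    """
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu: np.ndarray, log_var: np.ndarray) -> float:
    """Closed-form KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions."""
    return float(-0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var)))


# Hypothetical encoder outputs for one input, with a 3-dimensional latent space.
mu = np.array([0.5, -1.0, 0.0])
log_var = np.array([0.0, -0.5, 0.2])

z = reparameterize(mu, log_var)  # latent code a decoder would map back to an image
print(z.shape, kl_to_standard_normal(mu, log_var))
```

During training, this KL term is added to a reconstruction loss; the balance between the two shapes how smooth, and therefore how easy to sample from, the latent space becomes.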

Various architectural approaches are used to achieve multimodality, with the encoder-decoder framework and transformer-based architectures being particularly common. Transformer-based architectures have become central due to their ability to handle sequential data and capture long-range dependencies across modalities using attention mechanisms.
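For reference, the scaled dot-product attention at the heart of the Transformer is usually written as follows, where d_k is the key dimension. In self-attention, queries, keys, and values are all projections of the same input sequence; in cross-modal attention, the queries come from one modality and the keys and values from another. The symbols X, X_a, X_b, and the W projection matrices are notation introduced here for illustration, not taken from a specific model.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V

% Self-attention: a single input sequence X
Q = X W_Q, \quad K = X W_K, \quad V = X W_V

% Cross-modal attention: queries from modality a, keys and values from modality b
Q = X_a W_Q, \quad K = X_b W_K, \quad V = X_b W_V
```

The division by the square root of d_k keeps the dot products from growing with the key dimension, which would otherwise push the softmax toward near one-hot weights.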


Making Different Data Types Play Nice Together

The secret sauce of multimodal AI lies in how it gets different types of data to understand each other, much like a translator at an international summit who makes sure everyone’s on the same page despite speaking different languages.

Several data fusion strategies make this possible:

| Technique | Description | Advantages | Disadvantages |
|---|---|---|---|
| Early Fusion | Combines raw data from different modalities before feature extraction | Captures low-level correlations | Challenging with asynchronous or differently structured data |
| Mid Fusion | Combines modality-specific features at intermediate processing stages | Allows initial modality-specific processing | May struggle with fundamental differences in modality structure |
| Late Fusion | Processes each modality independently and combines their final outputs | Robust to modality-specific noise | May miss early interactions between modalities |
| Hybrid Fusion | Combines different fusion strategies at various stages of the model | Leverages the strengths of different techniques | Can be complex to design and implement effectively |
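The difference between early and late fusion in the table can be sketched in a few lines. This toy example uses random, untrained linear scorers as hypothetical stand-ins for real networks, and simple averaging as the late-fusion rule; it only shows where the modalities get combined, not how a production model would be trained.

```python
import numpy as np

rng = np.random.default_rng(seed=42)

# Hypothetical raw inputs for 5 samples: flattened pixel values and bag-of-words counts.
image_raw = rng.standard_normal((5, 8))
text_raw = rng.standard_normal((5, 4))


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


# Early fusion: concatenate the raw modalities first, then apply ONE model
# (a random linear scorer standing in for a real network).
w_joint = rng.standard_normal(8 + 4)
early_scores = sigmoid(np.concatenate([image_raw, text_raw], axis=1) @ w_joint)

# Late fusion: score each modality with its OWN model, then combine the
# final outputs (here, a plain average of the per-modality probabilities).
w_image = rng.standard_normal(8)
w_text = rng.standard_normal(4)
late_scores = (sigmoid(image_raw @ w_image) + sigmoid(text_raw @ w_text)) / 2

print(early_scores.shape, late_scores.shape)
```

In the late-fusion branch, a noisy text input only corrupts one of the two averaged scores, which is the robustness the table mentions; the price is that no weight ever sees an image feature and a text feature together.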

Attention mechanisms and transformer architectures play a pivotal role in effective integration. Cross-modal attention enables models to dynamically focus on the most relevant information across different modalities. For example, in a typical text-to-image generation model, features of the image being generated act as queries that attend to the embeddings of the text prompt (the keys and values), so each region of the image can draw on the words most relevant to it.
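Here is a minimal NumPy sketch of a cross-attention step, assuming image-region features serve as queries and text-token embeddings supply the keys and values, the arrangement many text-to-image models use (swapping the two inputs reverses the roles). The dimensions, random projection matrices, and inputs are hypothetical placeholders for learned weights and real encoder outputs.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries_src, kv_src, w_q, w_k, w_v):
    """Scaled dot-product cross-attention: rows of queries_src attend over rows of kv_src."""
    q = queries_src @ w_q  # (n_queries, d_k)
    k = kv_src @ w_k       # (n_keys, d_k)
    v = kv_src @ w_v       # (n_keys, d_v)
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)  # each query's weights over the keys sum to 1
    return weights @ v, weights


rng = np.random.default_rng(seed=0)
d_model, d_k = 16, 8
image_regions = rng.standard_normal((6, d_model))  # hypothetical: 6 image-region features
text_tokens = rng.standard_normal((4, d_model))    # hypothetical: 4 text-token embeddings
w_q, w_k, w_v = (rng.standard_normal((d_model, d_k)) for _ in range(3))

# Image regions act as queries; text tokens supply keys and values.
fused, attn = cross_attention(image_regions, text_tokens, w_q, w_k, w_v)
print(fused.shape, attn.shape)  # (6, 8) (6, 4)
```

Each row of `attn` shows how strongly one image region draws on each of the four text tokens, which is exactly the "focus on what’s relevant" behavior described above.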

Transformer architectures, with their self-attention and cross-attention layers, are particularly well suited for capturing both relationships within a single modality and relationships between different modalities. This capability allows the model to dynamically weigh the importance of different parts of the input modalities, leading to better alignment and fusion of information.

From Sci-Fi to Reality: How Industries Are Being Transformed

Multimodal generative AI isn’t just theoretical; it’s already reshaping major industries in tangible ways.

Healthcare: Personalized Medicine Gets Real

In healthcare, multimodal AI is enhancing diagnostics, treatment planning, and patient care by integrating data from Electronic Health Records (EHRs), medical imaging, and patient notes. These systems can analyze X-ray and MRI images alongside a patient’s medical history to detect early signs of illness and cross-reference pathology reports with genetic data for more precise treatment recommendations.

IBM Watson Health was a prominent example of this approach, aiming to integrate diverse medical data to aid in accurate disease diagnosis and personalized treatment plans. The ability to process and synthesize diverse medical data offers a more comprehensive view of patient health, which can lead to more accurate diagnoses and tailored treatment strategies.

E-commerce: Taking Shopping Experiences to the Next Level

Online retailers are using multimodal AI to personalize shopping experiences and streamline content creation by combining user interactions, product visuals, and customer reviews. They can generate customized product videos and tutorials while enhancing product recommendations by analyzing both visual attributes and textual data like customer reviews.

Amazon, for instance, utilizes multimodal AI to improve packaging efficiency. By analyzing diverse data sources, e-commerce platforms provide more accurate suggestions, optimize product placement, and boost overall customer satisfaction. As I discussed in my comprehensive guide to generative AI applications, e-commerce is one of the sectors seeing the most dramatic transformations from these technologies.

Entertainment: Reimagining Content Creation

The entertainment industry is witnessing a revolution in content generation, interactive experiences, and personalization. These systems can generate movie scenes based on user preferences, create interactive content and personalized fan messages, and even develop AI-generated characters for immersive storytelling.

Additionally, automated video editing and poster generation are becoming feasible, while semantic search capabilities in media archives are being enhanced, allowing for more intuitive content discovery. Google’s Gemini model can even generate recipes from images of food, a perfect example of how these technologies are breaking down barriers between different types of media.

Automotive: Driving Innovation (Pun Intended)

The automotive sector is significantly benefiting from multimodal AI, particularly in the advancement of autonomous driving and safety systems. By integrating data from cameras, radar, lidar, and other sensors, these systems enhance real-time navigation and decision-making in complex driving scenarios.

Furthermore, generative AI can create realistic simulations of countless driving situations, including dangerous and exceptional scenarios, to improve the safety of autonomous vehicle control algorithms before they’re deployed on public roads. This comprehensive understanding of the driving environment is essential for developing safer and more reliable autonomous vehicles.

Education: Learning Gets Personal

Multimodal AI is transforming education by creating personalized and engaging environments through the integration of text, video, and interactive content. Instructional materials can be customized to match individual student needs and learning preferences.

Duolingo, for example, utilizes multimodal AI to improve its language-learning software by fusing text, audio, and visual elements to create interactive and individualized courses. Additionally, these systems can summarize video classes and track facial expressions in online classrooms to gauge student engagement. This approach has the potential to significantly enhance learning outcomes through more personalized and interactive educational experiences, something I explored in my article about generative AI applications transforming various industries.

The Hurdles We Still Need to Overcome

Despite the impressive capabilities of multimodal generative AI, several significant challenges remain in training and deploying these systems.

One key hurdle lies in addressing data heterogeneity and synchronization issues. Data from different modalities inherently varies in format, structure, and sampling rates. Text is sequential and symbolic, while images are spatial and pixel-based. Ensuring temporal and contextual alignment between these diverse data types is crucial but often difficult.
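To make the synchronization problem concrete, here is a minimal sketch that aligns an audio feature stream to video frames by averaging the audio frames that fall inside each video frame’s time window. The 100 Hz audio feature rate, 25 fps video rate, and toy features are assumptions chosen for illustration; interpolation or learned alignment are common alternatives.

```python
import numpy as np


def align_to_video(audio_feats: np.ndarray, audio_rate_hz: float,
                   video_fps: float, num_video_frames: int) -> np.ndarray:
    """Average the audio feature frames that fall inside each video frame's time window.

    audio_feats: (num_audio_frames, dim) array sampled at audio_rate_hz.
    Returns a (num_video_frames, dim) array with one pooled audio vector per video frame.
    """
    audio_times = np.arange(len(audio_feats)) / audio_rate_hz
    aligned = np.zeros((num_video_frames, audio_feats.shape[1]))
    for i in range(num_video_frames):
        start, end = i / video_fps, (i + 1) / video_fps
        in_window = (audio_times >= start) & (audio_times < end)
        if in_window.any():
            aligned[i] = audio_feats[in_window].mean(axis=0)
        # Windows with no audio frames stay at zero, a crude stand-in for "missing".
    return aligned


# Hypothetical input: 8 audio frames (80 ms at 100 Hz), 2-dimensional features.
audio = np.arange(16, dtype=float).reshape(8, 2)
aligned = align_to_video(audio, audio_rate_hz=100, video_fps=25, num_video_frames=2)
print(aligned)
```

At these rates, each 40 ms video frame absorbs four audio frames, so the two streams end up with the same number of time steps and can be fused position by position.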

Another major challenge involves the compute- and memory-intensive training requirements of large multimodal models. These models often have significantly more parameters than unimodal models, especially when they combine architectures like vision transformers for images with language models for text. Training such models requires vast amounts of data and specialized hardware, such as GPUs or TPUs, making it expensive and potentially inaccessible to smaller teams or researchers with limited resources.
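A quick back-of-the-envelope calculation shows why the hardware bill climbs so fast. The sketch assumes a commonly cited rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer, versus 2 bytes per parameter just to hold fp16 weights; activations, batch size, and framework overhead come on top. The model sizes are hypothetical.

```python
def model_state_gb(num_params: float, bytes_per_param: int = 16) -> float:
    """Rough memory for model states in GB, excluding activations.

    The default of 16 bytes per parameter assumes mixed-precision training with Adam:
    2 (fp16 weights) + 2 (fp16 gradients) + 4 (fp32 master weights)
    + 4 (Adam first moment) + 4 (Adam second moment).
    """
    return num_params * bytes_per_param / 1e9


# Hypothetical multimodal model: a 0.4B-parameter vision encoder plus a 7B-parameter language model.
vision_params = 0.4e9
language_params = 7e9
total = vision_params + language_params

print(f"fp16 weights only:        {model_state_gb(total, bytes_per_param=2):.1f} GB")
print(f"training states (Adam):   {model_state_gb(total):.1f} GB")
```

For this hypothetical 7.4-billion-parameter model, that works out to roughly 14.8 GB just for the weights but about 118.4 GB of training state, more than a single 80 GB accelerator can hold, before counting any activations.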

Ensuring data quality, consistency, and addressing missing data are also critical challenges. Obtaining high-quality, consistently labeled datasets that cover all the relevant modalities can be time-consuming and costly. Furthermore, real-world datasets often contain incomplete information, where some entries might be missing data for certain modalities.
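One simple way to cope with records that lack a modality is to fuse only what is present. The sketch below averages the available embeddings and skips missing ones; it assumes every modality has already been projected to the same embedding size, and the names and values are hypothetical. Learned placeholder embeddings or imputation are common alternatives.

```python
from typing import Optional

import numpy as np


def fuse_available(embeddings: dict[str, Optional[np.ndarray]]) -> np.ndarray:
    """Average the embeddings of the modalities that are present, skipping missing ones (None)."""
    present = [e for e in embeddings.values() if e is not None]
    if not present:
        raise ValueError("sample has no modalities available")
    return np.mean(np.stack(present), axis=0)


sample = {
    "text": np.array([1.0, 0.0, 2.0]),
    "image": None,  # hypothetical: the image is missing for this record
    "audio": np.array([3.0, 2.0, 0.0]),
}
print(fuse_available(sample))  # [2. 1. 1.]
```

Averaging keeps the fused vector on the same scale regardless of how many modalities arrived, which a plain sum would not.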

Mitigating biases and ensuring fairness across modalities is another significant concern. Biases present in the training data of one or more modalities can be amplified within the multimodal system, potentially leading to unfair or discriminatory outcomes. Therefore, careful attention must be paid to the composition of the training data and the design of the model to identify and mitigate these biases, ensuring fairness across different demographic groups and modalities.
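Identifying bias usually starts with disaggregated evaluation: measuring performance separately for each group instead of reporting one overall number. The sketch below computes per-group accuracy and the gap between the best- and worst-served groups; the labels, predictions, and group names are hypothetical, and a gap like this only flags a problem, it does not fix it.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical evaluation results for 8 samples from two demographic groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

acc = accuracy_by_group(y_true, y_pred, groups)
gap = max(acc.values()) - min(acc.values())
print(acc, gap)
```

The same breakdown can be repeated per input modality (for example, image-only versus image-plus-text) to see whether one modality is the source of the disparity.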

The Broader Impact: Ethics, Society, and Economics

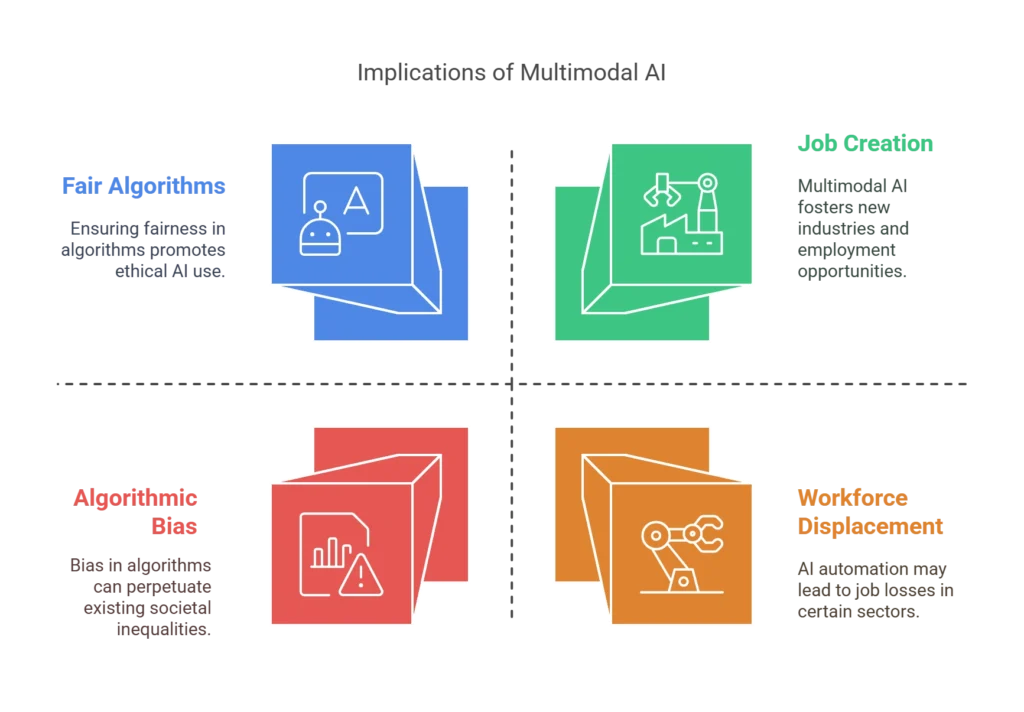

The increasing prevalence of multimodal AI brings forth a range of ethical, societal, and economic implications that warrant careful consideration.

On the ethical front, bias in algorithms is a significant concern, as multimodal AI systems that integrate data from multiple sources risk amplifying biases present in any of those data types. Ensuring the development of fair and transparent algorithms is therefore paramount. Privacy is another critical ethical consideration, given that multimodal AI systems often rely on diverse and potentially sensitive information, such as medical records or personal communications.

From a societal perspective, these systems are leading to changes in human-technology interaction, fostering the development of more natural and intuitive interfaces that mimic human communication. Multimodal interfaces can also increase accessibility for individuals with disabilities by providing alternative ways to interact with technology. However, there is also the potential for the spread of misinformation through AI-generated content, highlighting the need for increased digital literacy and effective content moderation strategies.

Economically, the adoption of multimodal AI is expected to have significant effects on labor markets, as AI-powered automation may displace workers in certain roles, necessitating investments in retraining and upskilling initiatives. Simultaneously, the growth of the multimodal AI field will likely lead to the creation of new industries and job roles. As I noted in my exploration of generative AI applications, this technology is both disrupting existing business models and creating entirely new opportunities.

What’s Next: Forecasting the Future

The field of multimodal generative AI is poised for significant advancements and transformations in the coming years. One notable trend is the rise of unified multimodal models, which are designed to handle and generate content across multiple modalities seamlessly within a single architecture. Models like GPT-4o and Gemini exemplify this direction, accepting combinations of text, images, audio, and video as input within one model and, to varying degrees, generating more than one of these modalities as output.

Ongoing research is focused on advancements in cross-modal interaction and understanding, aiming to improve the alignment and fusion of data from different formats. Future developments will likely involve more sophisticated attention mechanisms and cross-modal learning techniques that enable AI to better understand the intricate relationships between various data types.

There is an increasing demand for real-time multimodal processing in applications such as autonomous driving and augmented reality. Achieving low-latency processing of data from multiple sensors and sources will be crucial for the widespread adoption of these technologies.

Another important trend is the emergence of smaller, more efficient multimodal models. While large language models have shown impressive capabilities, their size and computational demands can be prohibitive for many applications. Small language models (SLMs) with multimodal capabilities offer a more cost-effective and practical option for deployment on local devices like smartphones and IoT hardware, broadening access to this technology.

Could This Be the Path to Artificial General Intelligence?

Some experts believe that multimodal AI represents a crucial step towards achieving Artificial General Intelligence (AGI)—a hypothetical AI system that possesses human-like cognitive abilities across a wide range of tasks.

Human learning is inherently multimodal, relying on the integration of information from multiple senses. By enabling AI to process and understand the world in a similar way, through the convergence of text, vision, audio, and other modalities, multimodal AI aims to mimic this fundamental aspect of human intelligence. This capability allows for more comprehensive and nuanced problem-solving, as AI can leverage information from diverse sources to gain a deeper understanding of context and subtleties.

However, there are also counterarguments to consider. Some experts argue that current multimodal AI systems, particularly those built on large language models (LLMs), have inherent limitations in areas such as real-time adaptability, memory integration, and energy efficiency compared to biological intelligence. Critics have also raised concerns that LLMs primarily regurgitate patterns from their training data and may lack true reasoning or understanding.

Final Thoughts

Multimodal generative AI represents a significant leap forward in artificial intelligence, moving beyond single-modality processing to integrate and generate content across diverse data types. The applications of this technology are already transforming industries from healthcare to entertainment, with the potential for even greater impact in the coming years.

Despite the challenges in training and deploying these systems, ongoing research and development are addressing these hurdles and pushing the boundaries of what’s possible. The ethical, societal, and economic implications of widespread adoption require careful consideration to ensure that multimodal AI benefits society as a whole.

Whether or not multimodal AI leads directly to AGI, it undoubtedly represents a significant step toward more human-like artificial intelligence. As the field continues to evolve, we can expect to see increasingly sophisticated systems that further blur the lines between different modalities and revolutionize how we interact with technology.

FAQs

What exactly is multimodal generative AI?

Multimodal generative AI refers to artificial intelligence systems that can process and generate content across multiple types of data (modalities) such as text, images, audio, and video. Unlike traditional AI systems that typically handle only one type of data, multimodal AI integrates these different forms of information to create outputs that combine these modalities in a coherent way.

How is multimodal AI different from regular generative AI?

While regular generative AI focuses on creating new content within a single data type (like text-only or image-only), multimodal AI can understand and generate content that spans multiple data types simultaneously. This allows for more complex and nuanced outputs that better mirror how humans perceive and interact with the world.

What industries are being most affected by multimodal AI?

Several industries are seeing significant transformation, including healthcare (through improved diagnostics and personalized medicine), e-commerce (with enhanced shopping experiences), entertainment (via advanced content creation), automotive (for autonomous driving systems), education (through personalized learning), and finance (with better fraud detection and risk assessment).

What are the biggest challenges facing multimodal AI development?

Key challenges include: data heterogeneity and synchronization issues across different modalities; the intensive computational requirements for training large models; ensuring data quality and addressing missing data; mitigating biases across modalities; and improving the interpretability of these complex systems.

Is multimodal AI bringing us closer to Artificial General Intelligence (AGI)?

While multimodal AI represents a significant step toward more human-like AI by mimicking our ability to integrate information from multiple senses, experts are divided on whether it will lead directly to AGI. Some believe it’s a crucial building block, while others argue that achieving true AGI will require fundamental breakthroughs in areas such as reasoning, consciousness, and adaptability that go beyond current multimodal capabilities.